If you’ve tried to pin down the cost of SOC 2 compliance, you’ve probably noticed how slippery the answers are. One source says it’s manageable. Another suggests six figures. Most settle on “it depends” and move on.

The truth is simpler, but less comfortable. SOC 2 isn’t a single expense. It’s a mix of audit fees, internal time, tooling, preparation work, and ongoing effort that shows up long before and long after the auditor signs off. Some costs are obvious. Others quietly pile up in the background and catch teams off guard.

This article breaks down what SOC 2 compliance actually costs in 2026, why the numbers vary so widely, and where companies tend to underestimate the real spend, especially in time, focus, and operational drag.

The Baseline: What Companies Typically Spend In 2026

For most small to mid-sized organizations in 2026, SOC 2 compliance lands somewhere between $30,000 and $150,000 in the first year. That range is wide, but it reflects real differences in approach and maturity.

At a high level:

- Lean startups with simple infrastructure can stay closer to the lower end.

- Growing SaaS companies with multiple systems and customers land in the middle.

- Larger or regulated businesses with complex environments push toward the top.

What matters most is not company size alone, but how much work needs to happen before an auditor can confidently sign off.

Understanding SOC 2 Compliance Cost Components

Those components do not behave the same way. Audit fees are usually quoted and budgeted upfront. Internal effort, remediation, and tooling scale with scope and tend to surface gradually as the process unfolds.

This section breaks down the main cost drivers teams face in 2026, starting with the audit itself and moving through the less visible but often more expensive parts of compliance.

SOC 2 Audit Costs

The audit is the formal attestation and the most visible line item in any SOC 2 budget. In 2026, audit pricing continues to vary widely based on scope, complexity, and auditor reputation.

SOC 2 Type 1 Audit Costs

A SOC 2 Type 1 audit evaluates whether your controls are designed appropriately at a specific point in time. It does not assess how well those controls operate over an extended period.

Typical cost range in 2026: $5,000 to $25,000

Lower-end pricing usually applies to smaller teams, limited scope, and clean documentation. Higher-end pricing reflects broader systems, more evidence requirements, and the use of well-known audit firms.

SOC 2 Type 2 Audit Costs

SOC 2 Type 2 evaluates how controls operate over time, usually across a three- to twelve-month observation period. This is the report most customers and enterprise buyers expect.

Typical cost range in 2026: $7,000 to $50,000 for the audit itself

While the audit fee is higher, the real increase comes from the sustained internal effort required to maintain controls and evidence throughout the observation window.

Auditor Choice and Why Cheap Audits Can Backfire

Not all SOC 2 auditors are viewed equally by customers. Established firms charge more, but their reports carry more weight during security reviews and procurement processes.

Cheaper audits can be tempting, especially for early-stage companies. The risk is that enterprise customers may question the auditor’s credibility. If that happens, companies often have to repeat the audit with a different firm, effectively paying twice.

In practice:

- Boutique firms can be cost-effective if they are well-regarded

- Big-name firms are expensive but rarely questioned

- Unknown auditors create risk during sales cycles

The value of a SOC 2 report depends heavily on who signed it.

The Hidden Cost Most Teams Underestimate: Internal Time

The largest and least predictable SOC 2 cost is internal effort. This rarely appears in budgets, but it shows up quickly in missed deadlines, slower product delivery, and overloaded teams.

Who Gets Pulled Into SOC 2 Work

SOC 2 is not a security-only exercise. It typically involves engineering, IT, HR, legal, leadership, and customer-facing teams. Someone needs to own the process end to end, often becoming a part-time or full-time coordinator for months.

Realistic Time Investment

For a first SOC 2 cycle in 2026, most teams should expect:

- 100 to 200 hours of internal work at minimum

- Often closer to six months of ongoing effort for Type 2

This is time not spent building product or supporting customers, making it a significant opportunity cost.
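To put a number on that opportunity cost, multiply the estimated hours by a fully loaded hourly rate for the people doing the work. The sketch below is a minimal Python illustration. The function name and the $150 hourly rate are hypothetical placeholders rather than benchmarks, so substitute your own figures.

```python
def internal_time_cost(
    hours_low: float, hours_high: float, loaded_hourly_rate: float
) -> tuple[float, float]:
    """Return the (low, high) opportunity cost of internal SOC 2 hours.

    loaded_hourly_rate is an assumed input: salary plus benefits and
    overhead, expressed per hour, for the people pulled into the work.
    """
    if hours_low > hours_high:
        raise ValueError("hours_low must not exceed hours_high")
    return hours_low * loaded_hourly_rate, hours_high * loaded_hourly_rate


# 100 to 200 hours is the minimum range cited above; $150/hour is hypothetical.
low, high = internal_time_cost(100, 200, loaded_hourly_rate=150)
print(f"Internal time: ${low:,.0f} to ${high:,.0f}")
```

Because 100 to 200 hours is described as a floor, treat the result as a lower bound. A Type 2 cycle that stretches over several months will usually push the real figure higher.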

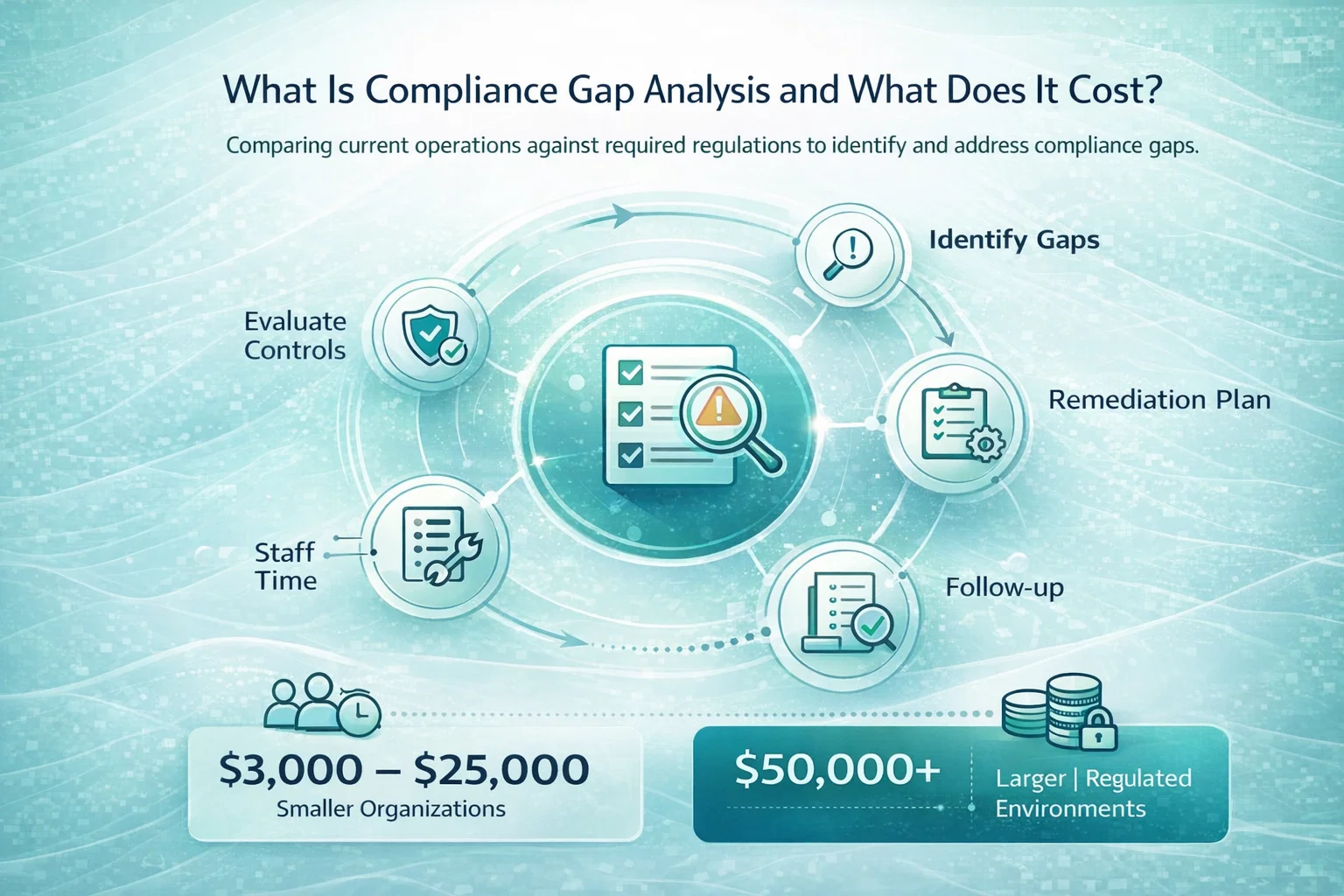

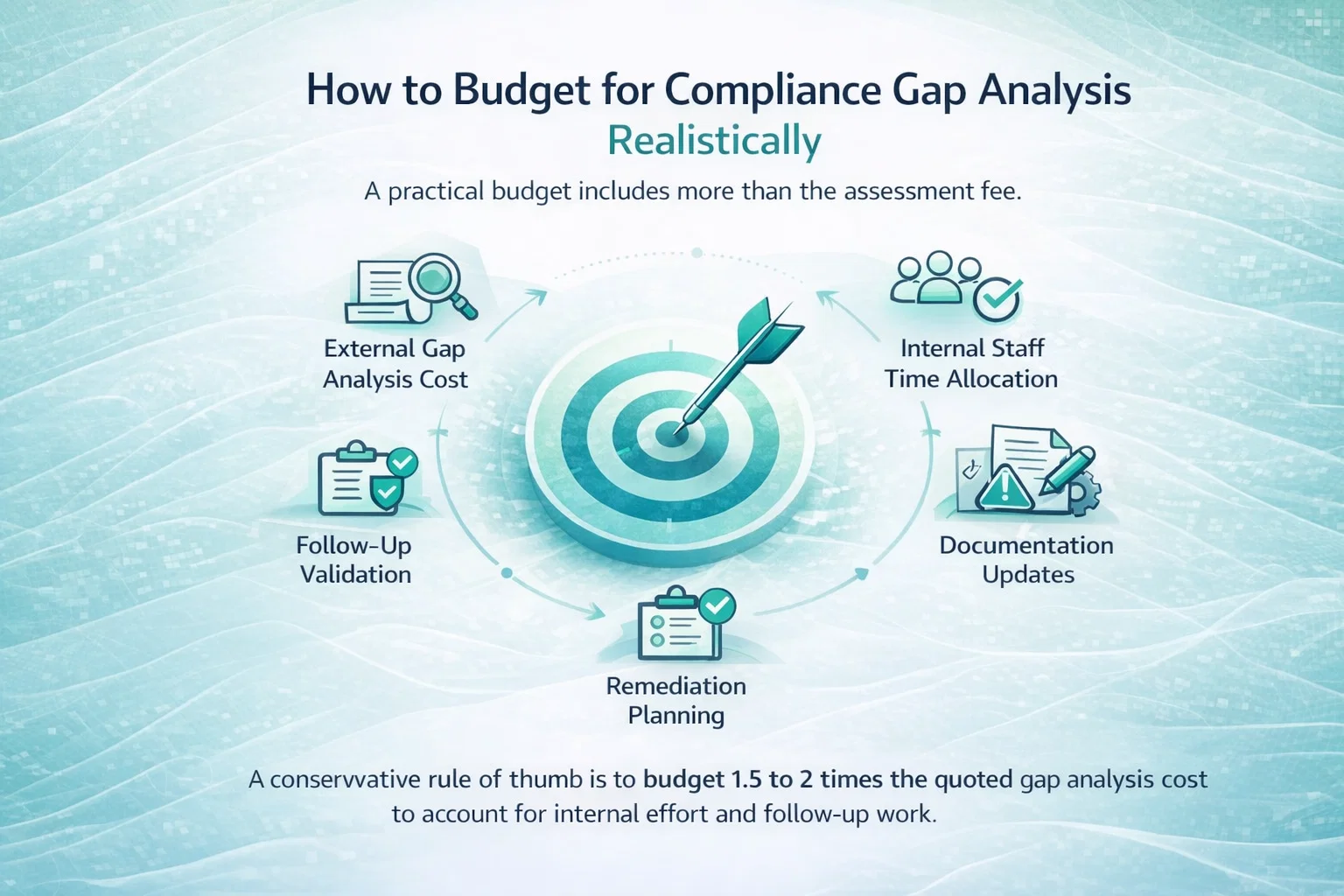

Readiness Assessments and Gap Analysis

Before the audit begins, many companies run a readiness assessment. This structured review helps identify gaps early and reduces the risk of audit surprises.

Typical readiness assessment costs:

- $0 if done internally

- $10,000 to $20,000 if handled by consultants or platforms

While readiness assessments can prevent audit failure, they often uncover remediation work that adds to the overall cost.

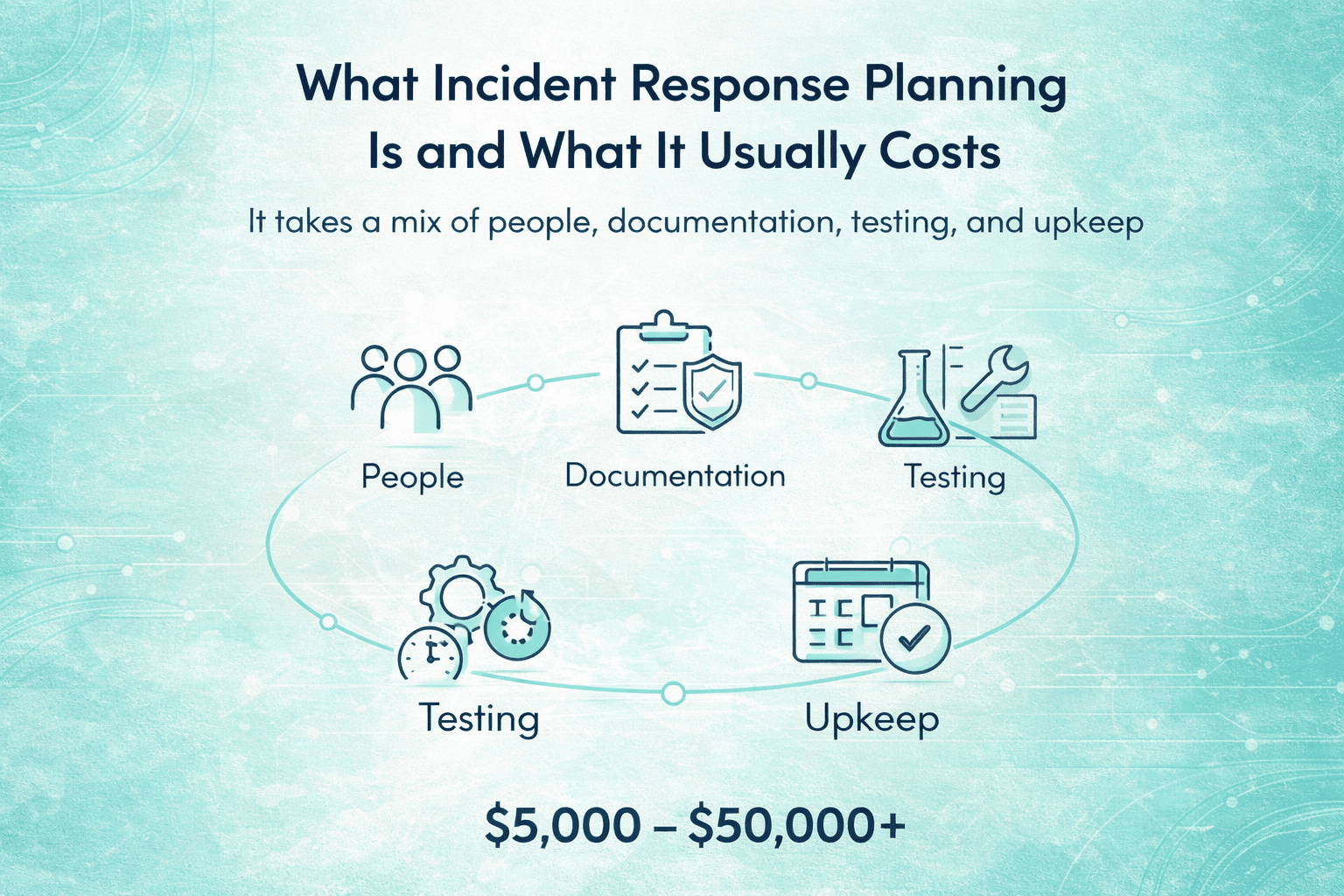

Remediation Costs: Fixing What Is Missing

Once gaps are identified, remediation begins. This is where budgets often stretch beyond initial expectations.

Common remediation areas include:

- Багатофакторна автентифікація

- Centralized logging

- Access reviews

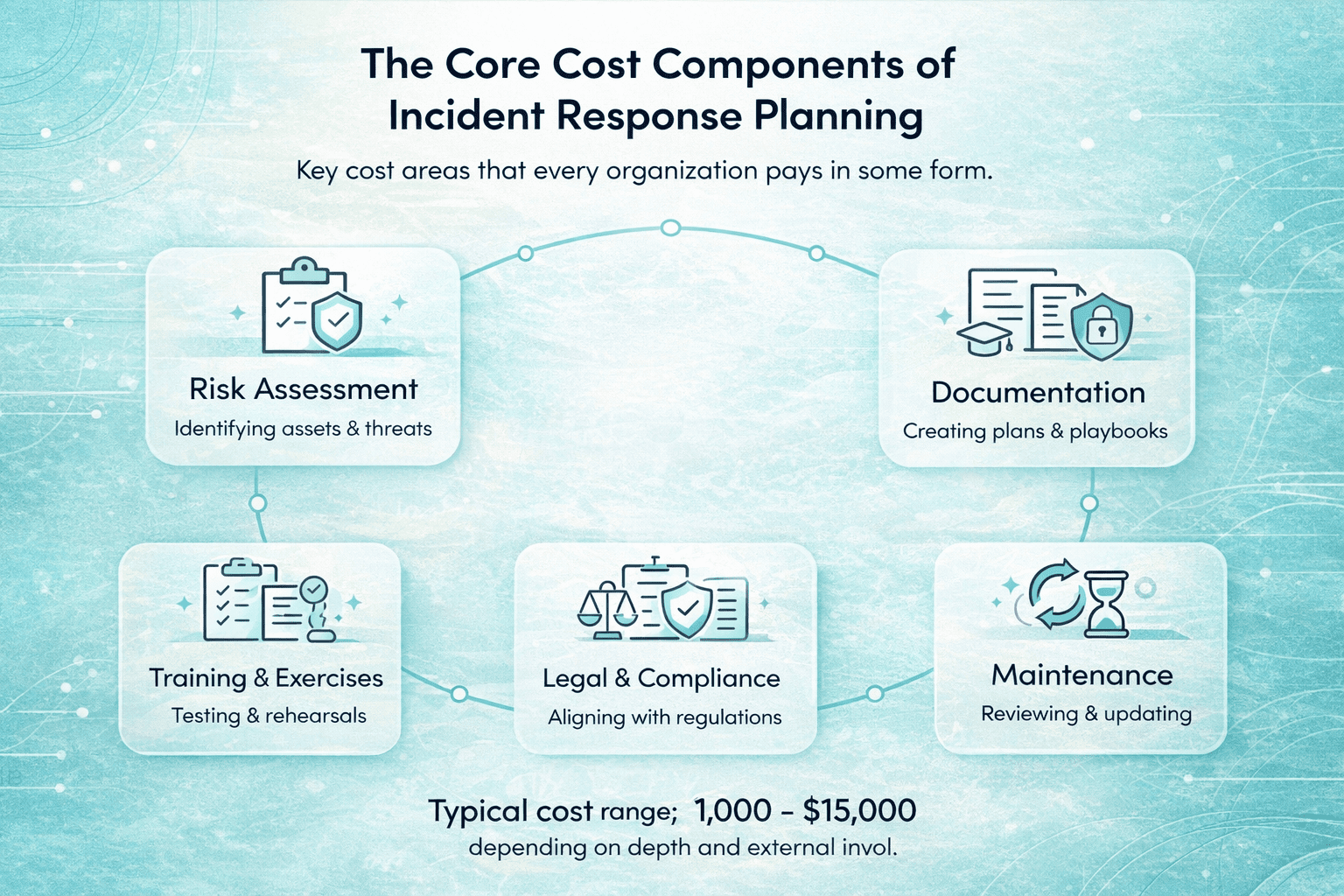

- Incident response procedures

- Vendor risk management

Typical remediation spend in 2026: $5,000 to $30,000 or more

For some teams, remediation is documentation-heavy. For others, it requires real infrastructure changes and new tooling.

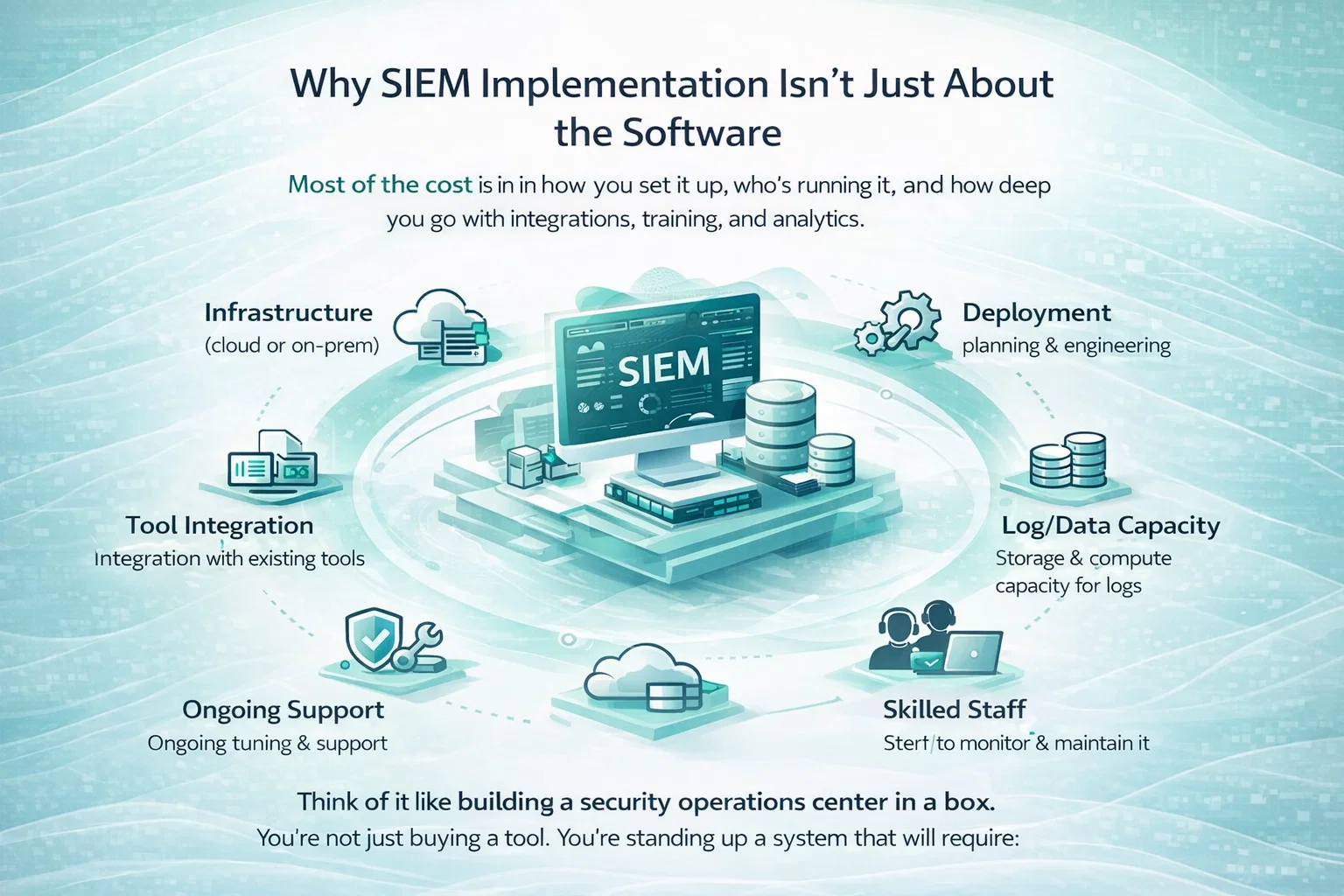

Security Tools and Compliance Platforms

SOC 2 does not mandate specific tools, but many teams adopt them to reduce manual effort and ongoing workload.

Common tooling categories include endpoint management, password managers, vulnerability scanners, evidence collection platforms, and policy management tools.

In 2026:

- Lightweight setups may stay under $10,000 annually

- Fully managed platforms can exceed $30,000 per year

The tradeoff is cost versus time saved and operational consistency.
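One way to frame that tradeoff is a simple break-even check: in pure time terms, a tool pays for itself when the hours it saves, multiplied by a loaded hourly rate, exceed its annual price. The sketch below assumes hypothetical inputs for hours saved and hourly rate, and it deliberately ignores the consistency benefits, which are harder to price.

```python
def break_even_hours(annual_tool_cost: float, loaded_hourly_rate: float) -> float:
    """Hours per year a tool must save before its subscription is covered."""
    if loaded_hourly_rate <= 0:
        raise ValueError("loaded_hourly_rate must be positive")
    return annual_tool_cost / loaded_hourly_rate


def tool_pays_off(
    annual_tool_cost: float, hours_saved_per_year: float, loaded_hourly_rate: float
) -> bool:
    """True if the estimated time savings cover the annual cost."""
    return hours_saved_per_year >= break_even_hours(annual_tool_cost, loaded_hourly_rate)


# Hypothetical example: a $30,000/year platform and a $150/hour loaded rate.
print(break_even_hours(30_000, 150))    # 200.0 hours per year
print(tool_pays_off(30_000, 120, 150))  # False: 120 saved hours is below break-even
```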

Legal and Policy Review Costs

SOC 2 requires companies to formalize how data is handled, which often triggers legal review.

Typical legal expenses include reviewing customer contracts, updating internal policies, and aligning HR documentation.

In 2026, legal review typically costs: $5,000 to $15,000

These documents usually need annual updates, making this a recurring expense.

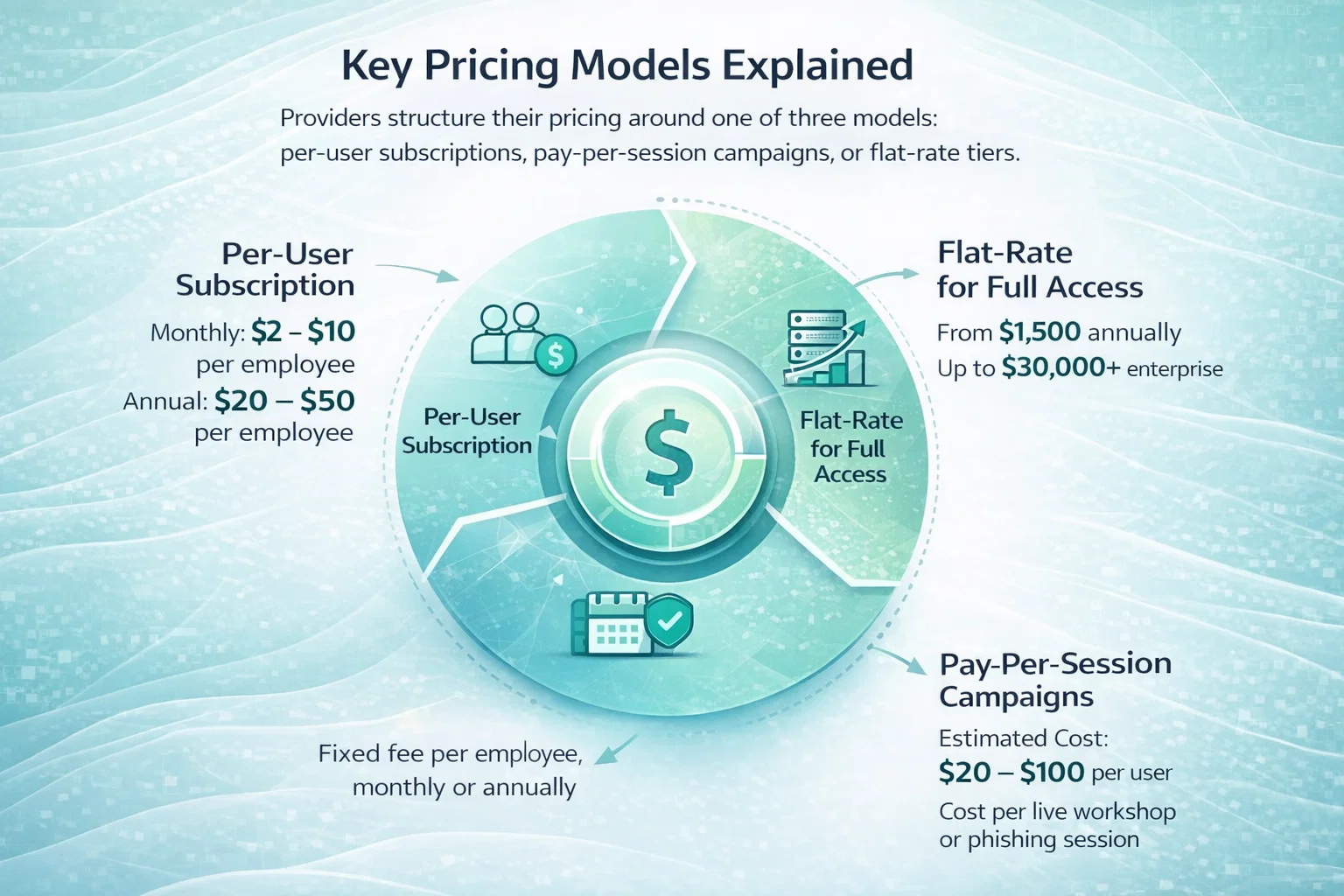

Training and Awareness Costs

Employee security training is a required part of SOC 2. It does not need to be expensive, but it cannot be skipped.

Typical costs include:

- Around $25 per user for basic awareness tools

- Up to $15,000 for instructor-led training sessions

Most small and mid-sized teams can meet requirements using low-cost or bundled options.
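Per-user pricing scales with headcount, while instructor-led sessions behave more like a flat fee. The small sketch below assumes the $25 per-user figure above is an annual price (the figure's billing period is not stated) and uses a hypothetical headcount.

```python
PER_USER_AWARENESS_COST = 25  # dollars per user; treated as annual here (assumption)


def awareness_training_cost(
    headcount: int, per_user_cost: float = PER_USER_AWARENESS_COST
) -> float:
    """Estimated annual cost of a per-user security awareness tool."""
    if headcount < 0:
        raise ValueError("headcount must be non-negative")
    return headcount * per_user_cost


# Hypothetical 80-person company
print(awareness_training_cost(80))  # 2000
```

At that scale the per-user option stays well below the $15,000 quoted for instructor-led sessions.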

Ongoing Maintenance Costs After Certification

SOC 2 does not end when the report is issued. Maintenance is where discipline and process maturity matter most.

Annual maintenance typically costs:

- 30 to 40 percent of the initial compliance spend

- $10,000 to $40,000 per year for most organizations

These costs cover annual audits, monitoring, policy reviews, and evidence upkeep.
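The percentage rule of thumb translates directly into a yearly range. In the minimal sketch below, the $80,000 first-year figure is a hypothetical input, not a benchmark.

```python
def annual_maintenance_range(
    initial_spend: float, low_pct: float = 0.30, high_pct: float = 0.40
) -> tuple[float, float]:
    """Apply the 30 to 40 percent rule of thumb to a first-year SOC 2 spend."""
    return initial_spend * low_pct, initial_spend * high_pct


low, high = annual_maintenance_range(80_000)  # hypothetical first-year spend
print(f"Expected upkeep: ${low:,.0f} to ${high:,.0f} per year")
```

Use the result as a sanity check against the $10,000 to $40,000 range rather than as a forecast.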

How We Help Teams Manage SOC 2 Costs Without Slowing Growth

At A-listware, we work with companies that are growing fast but still need control over risk, budgets, and delivery. SOC 2 often becomes part of that conversation not because teams want another framework to manage, but because customers expect a mature security posture. Our role is to help companies build the technical and operational foundation that makes compliance achievable without turning it into a bottleneck.

We focus on strengthening the systems and workflows that SOC 2 actually touches: secure infrastructure, clean access management, reliable monitoring, and development processes that hold up under audit scrutiny. Because we operate as an extension of our clients’ teams, we help align engineering, IT, and security work early, before gaps turn into expensive remediation or last-minute fixes. That upfront clarity is what keeps SOC 2 costs predictable instead of reactive.

With more than 25 years of experience in software development and consulting, we know that compliance works best when it is built into everyday operations. Our teams support cloud and on-premises environments, security-focused development practices, and long-term system stability so that SOC 2 becomes easier to maintain year after year. The result is not just a report for customers, but an environment that supports growth, trust, and delivery without constant rework.

Why Some Companies Overspend On SOC 2

Overspending on SOC 2 usually comes from avoidable decisions rather than strict requirements in the framework itself. In many cases, costs rise because teams try to do too much, too early, or without a clear plan.

Common drivers include:

- Over-scoping Trust Services Criteria. Many companies include multiple Trust Services Criteria that are not actually required by their customers. Each additional criterion increases documentation, testing, and evidence collection, which directly raises audit fees and internal workload.

- Manual evidence collection. Relying on spreadsheets, screenshots, and ad hoc checklists creates a large time burden. Manual collection also increases the risk of missing evidence, which leads to follow-up requests, rework, and longer audit cycles.

- Late remediation. When gaps are discovered late in the process, teams often rush to implement controls under time pressure. This usually results in higher consulting fees, emergency tooling purchases, or inefficient short-term fixes.

- Heavy reliance on consultants. Consultants can help with direction and expertise, but using them for day-to-day execution quickly becomes expensive. Paying external teams to manage evidence, documentation, and coordination often costs more than building minimal internal ownership.

- Buying tools too early without clear needs. Some organizations purchase full compliance platforms or security tools before understanding their actual gaps. This leads to unused features, overlapping tools, and higher subscription costs without proportional time savings.

SOC 2 rewards focus and restraint. Teams that stay deliberate about scope, sequence their work, and match tools to real needs tend to keep costs under control while still meeting compliance expectations.

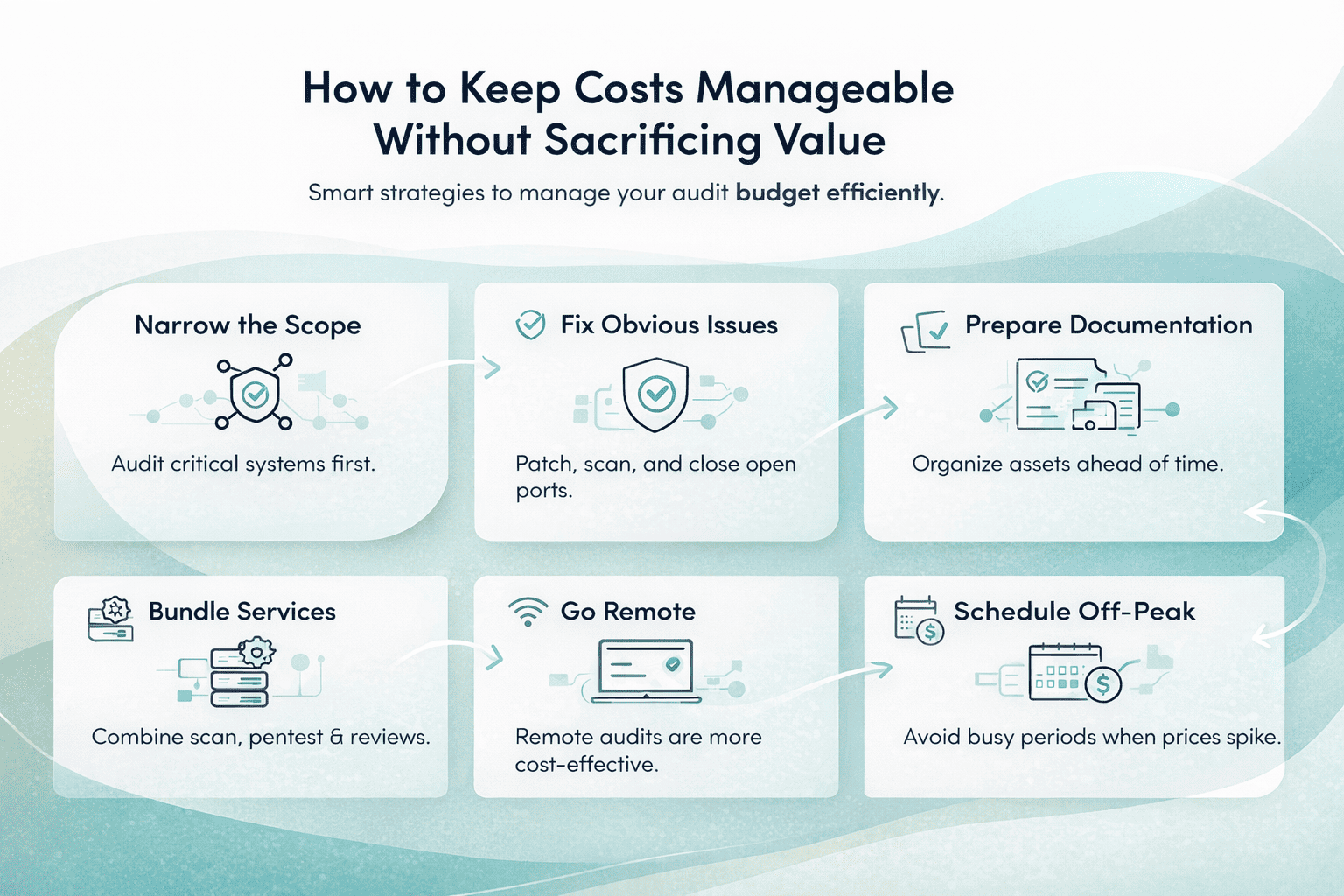

Lean Approaches That Keep SOC 2 Costs Under Control

Some teams manage to keep SOC 2 costs surprisingly low by taking a pragmatic approach from the start. Instead of treating compliance as a massive, one-time project, they focus on what is actually required for their customers and risk profile. That usually means starting with the Security criterion only, keeping the initial scope tight, and using a SOC 2 Type 1 audit as a learning phase before committing to a longer Type 2 cycle.

Lean teams also assign clear ownership early, automate repetitive evidence collection where it makes sense, and avoid over-engineering documentation. Policies are written to reflect how the company actually operates, not how a framework example suggests it should. Lean does not mean careless. It means intentional decisions, steady progress, and building compliance in a way that supports the business instead of slowing it down.
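As a rough illustration of what automating repetitive evidence collection can look like at the smallest scale, the sketch below records each evidence artifact with a UTC timestamp and a SHA-256 digest. A scheduled job can repeat the same capture, and the digest can later show the artifact was not modified. It uses only the Python standard library. The function name, control ID, and file contents are hypothetical, and a real setup would pull exports from your own systems rather than in-memory bytes.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Optional


def evidence_record(
    control_id: str,
    artifact_name: str,
    content: bytes,
    collected_at: Optional[datetime] = None,
) -> dict:
    """Build one manifest entry tying an evidence artifact to a control."""
    if collected_at is None:
        collected_at = datetime.now(timezone.utc)
    return {
        "control_id": control_id,  # hypothetical internal control reference
        "artifact": artifact_name,
        "sha256": hashlib.sha256(content).hexdigest(),  # fingerprint of the exact bytes
        "collected_at": collected_at.isoformat(),
    }


# Hypothetical example: a quarterly access-review export
record = evidence_record(
    control_id="AC-REVIEW-Q1",
    artifact_name="access_review_export.csv",
    content=b"user,role,approved_by\nalice,admin,bob\n",
    collected_at=datetime(2026, 3, 31, tzinfo=timezone.utc),
)
print(json.dumps(record, indent=2))
```

Appending records like this to a manifest on every scheduled run produces a dated trail that is easier to hand to an auditor than a folder of screenshots.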

A Realistic First-Year SOC 2 Cost Snapshot

For a typical growing SaaS company in 2026:

- Audit: $15,000 to $40,000

- Internal effort: $20,000 to $60,000 (opportunity cost)

- Tooling: $5,000 to $25,000

- Legal and policies: $5,000 to $10,000

- Remediation and upgrades: $10,000 to $30,000

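Total:

- About $35,000 to $105,000 in direct spend (audit, tooling, legal and policies, remediation)

- About $55,000 to $165,000 once internal effort is included, depending on maturity and approach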
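A quick way to sanity-check a total like this is to add up the low and high ends of each line item separately. The sketch below encodes the snapshot's ranges in a dictionary (the structure and names are illustrative) and can leave out internal effort, since that is an opportunity cost rather than an invoice.

```python
# First-year line items from the snapshot, as (low, high) in US dollars.
LINE_ITEMS: dict[str, tuple[int, int]] = {
    "audit": (15_000, 40_000),
    "internal_effort": (20_000, 60_000),  # opportunity cost rather than cash
    "tooling": (5_000, 25_000),
    "legal_and_policies": (5_000, 10_000),
    "remediation_and_upgrades": (10_000, 30_000),
}
OPPORTUNITY_COSTS: frozenset[str] = frozenset({"internal_effort"})


def total_range(
    items: dict[str, tuple[int, int]],
    exclude: frozenset[str] = frozenset(),
) -> tuple[int, int]:
    """Sum the low ends and the high ends of every item not in `exclude`."""
    included = [bounds for name, bounds in items.items() if name not in exclude]
    return sum(lo for lo, _ in included), sum(hi for _, hi in included)


direct = total_range(LINE_ITEMS, exclude=OPPORTUNITY_COSTS)
full = total_range(LINE_ITEMS)
print(f"Direct spend:            ${direct[0]:,} to ${direct[1]:,}")
print(f"Including internal time: ${full[0]:,} to ${full[1]:,}")
```

Summing ranges this way gives the widest possible spread, because it assumes every category lands at its low end (or its high end) at the same time. Real projects usually mix the two.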

The Long-Term Cost Question: Is SOC 2 Worth It?

SOC 2 is not cheap, and for many teams the upfront cost feels uncomfortable. But the absence of SOC 2 often carries its own price. Sales cycles slow down, security questionnaires multiply, and enterprise prospects hesitate when trust signals are missing. Over time, those delays and lost opportunities can outweigh the direct cost of compliance.

Teams that get the most value from SOC 2 treat it as an operational discipline rather than a one-off requirement. When controls are real, evidence is current, and processes are embedded into daily work, compliance stops feeling like friction. Instead of slowing growth, it removes uncertainty and allows teams to move faster with customers who expect a mature security posture.

Final Thoughts

SOC 2 compliance costs in 2026 are not fixed, but they are predictable if you understand where the effort goes. The audit fee is only part of the equation. Time, coordination, and follow-through matter just as much.

Plan conservatively. Scope carefully. Treat SOC 2 as a system you maintain, not a milestone you rush. That mindset alone can save money, time, and frustration.

Frequently Asked Questions

- How much does SOC 2 compliance cost in 2026?

In 2026, most companies spend between $30,000 and $150,000 in the first year of SOC 2 compliance. The final cost depends on audit type, scope, internal effort, tooling, remediation needs, and auditor choice. Smaller teams with simple infrastructure can stay closer to the lower end, while larger or more complex organizations typically spend more.

- What is the difference in cost between SOC 2 Type 1 and Type 2?

SOC 2 Type 1 audits usually cost between $5,000 and $25,000 and assess control design at a single point in time. SOC 2 Type 2 audits are more expensive, typically ranging from $7,000 to $50,000 for the audit alone, because they evaluate how controls operate over several months and require sustained internal effort.

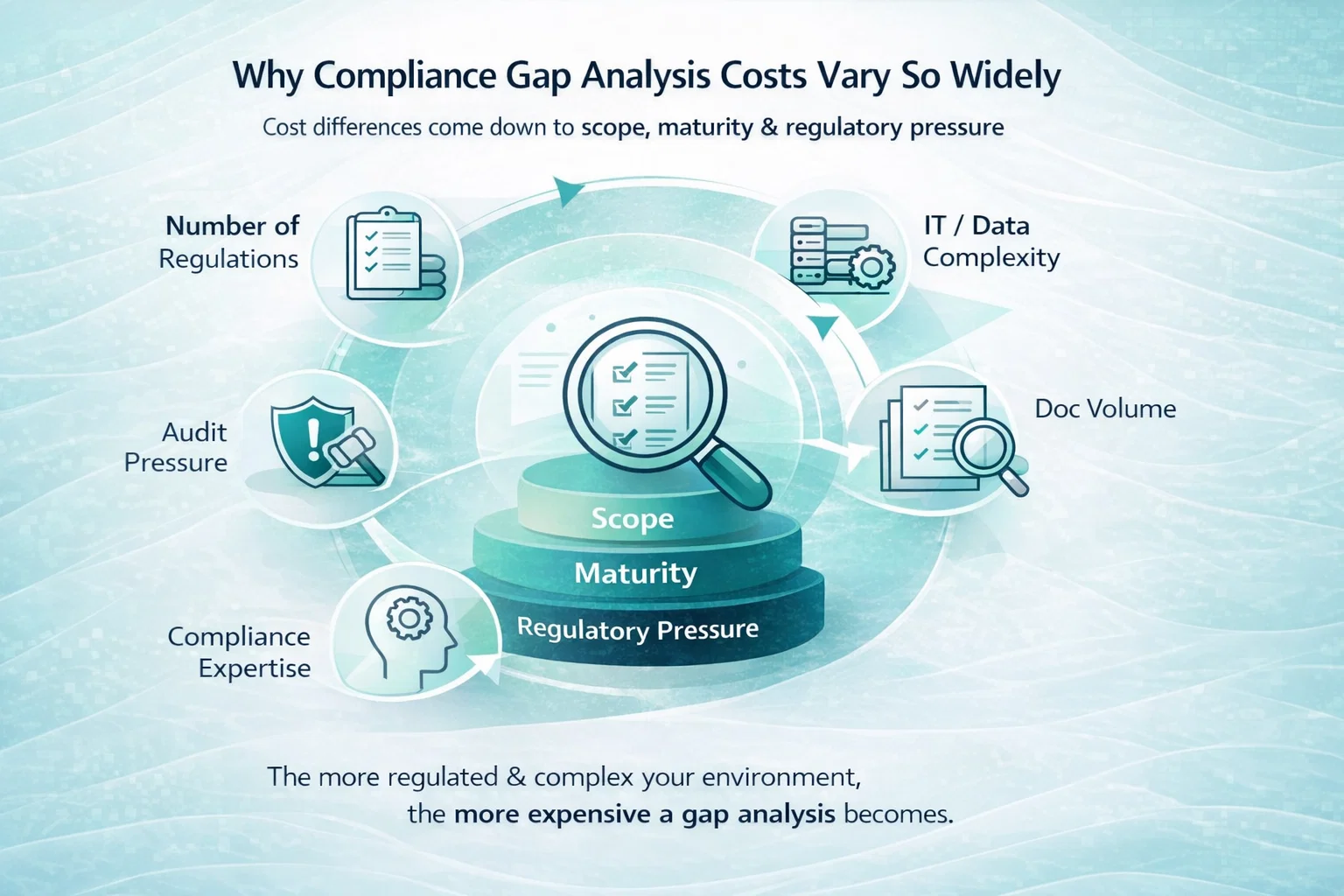

- Why do SOC 2 costs vary so much between companies?

SOC 2 costs vary because there is no fixed scope. Factors such as the number of Trust Services Criteria selected, system complexity, documentation maturity, auditor reputation, and how much work is done internally versus externally all influence the final cost.

- Are audit fees the biggest SOC 2 expense?

Not usually. While audit fees are the most visible cost, internal time is often the largest expense. Engineering, IT, HR, legal, and leadership teams all contribute time, and that opportunity cost is rarely captured in initial budgets.

- Can startups afford SOC 2 compliance?

Yes, but only with a disciplined approach. Startups that keep scope tight, start with Security only, use Type 1 as a learning phase, and avoid unnecessary tooling can manage SOC 2 costs more effectively. Poor planning and over-scoping are what typically make SOC 2 unaffordable for early-stage teams.